The Case for Secrets as Code: Stop Click-Managing Secrets

We live in the age of software as a service, and secret keys are everywhere. Yet somehow it is still normal to click-manage secrets. Smart people share them via Signal; smarter people share them via a password manager like Bitwarden. Super-advanced people punch them into the GitHub UI and only let GitHub Actions have access to secrets. Infra-devops people spin up Vault, AWS Secrets Manager, or similar to inject secrets. In all of these situations secrets have a lifecycle that is completely disconnected from code, causing the two to get out of sync or worse. ...

Stupid WASM Hack to Get Native Binaries

I relax by programming. It’s especially satisfying to play with something that changes how I think about a topic. I once discovered eget and realized that most modern software ships as a single binary attached to a GitHub release. Recently my friend David had a further realization: eget would be better if it were itself easier to get (ha!). I thought it would be delightful to have a WASI eget CLI to fetch native binaries. These days almost everything cross-compiles trivially to WASI command modules. Those are basically little Unix CLI apps that have a small footprint and can run anywhere (something Java never achieved). ...

Focus and Context and LLMs

I decided to write down some thoughts on agentic coding and why it’s a very hyped wrong turn. Let me start with some background on my LLM experience. I adopted LLMs into my work in Aug 2020. I was sold when I saw that GPT-3 could generate usable SQL statements. Something that used to take 4-8 hours of RTFMing now took 15 minutes. I have since worked on chatcraft.org, various RAG frameworks, etc. I use aider heavily for work, frequently switch models, and struggled with tool calling during the dark ages before MCP. ...

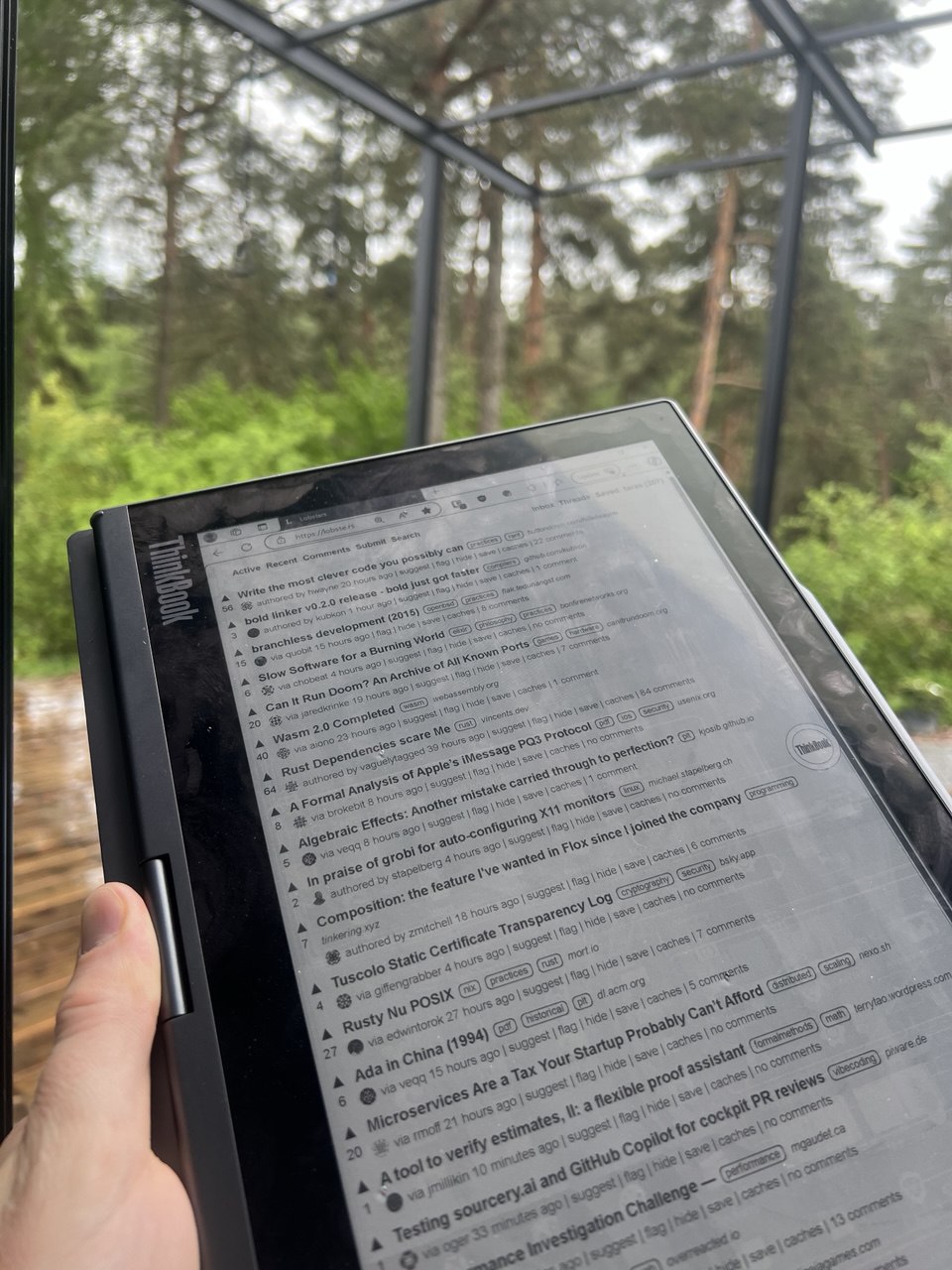

My E Ink Laptop: ThinkBook Plus Gen 4

I read a lot: blogs, papers, news, etc. My eyes are sensitive to artificial light, so I prefer to read on e-ink. I also like to work outside, so screens that try to fight the sun by feebly (relative to the sun) shining even more light into my eyes are frustrating. I also find e-ink screen limitations like slower updates and lack of color wonderful for not getting distracted by shiny pictures and videos. ...

Create Missing RSS Feeds With LLMs

It used to be that blogs all had RSS feeds. Somehow there are more blogs than ever before, but some people do not bother with setting up an RSS feed. The following blogs have nice content but are annoying to follow without RSS: https://aider.chat/blog/, https://jkatz05.com/, and https://getreuer.info/. Every time I stumble on one of these, it takes me a while to remember a fun workaround. I decided to write this down as a blog post so I have an easier time looking up the details. ...