13 GiB/s per core!

Sneller published a blog post, which got shared on HN, about how they use AVX-512 to decompress data at 13 GiB/s per core.

This is a fantastic ad for their “let’s turn logs on S3 into a cheap database” product. It’s a solution I’ve wanted multiple times, and I will definitely consider them the next time the need comes up.

Faster than RAM

The post itself did not get overlooked. What did get overlooked is that it drew in the ClickHouse CTO, who posted a link to a presentation on how ClickHouse uses compression to process in-memory data faster than raw RAM bandwidth would allow.

As a result of the discussion in those comments, ClickHouse might get even faster.
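Why can compression beat RAM bandwidth at all? A back-of-envelope model helps. This is my own simplification, not ClickHouse’s actual method. If RAM can move compressed bytes at some rate and each compressed byte expands into several logical bytes, the memory side can feed the query more logical data than the raw bandwidth suggests. That only holds if the cores can decompress fast enough to keep up. The function name, the perfect-scaling assumption, and the RAM bandwidth and compression ratio below are all hypothetical. Only the 13 per core comes from the Sneller post, and I’m assuming it counts uncompressed output bytes.

```python
def effective_scan_rate(ram_bw, compression_ratio, cores, decompress_per_core):
    """Hypothetical upper bound on logical (uncompressed) bytes scanned per second.

    All rates share one unit (e.g. GiB/s). decompress_per_core is assumed to be
    measured in uncompressed output bytes. Ignores caches, query CPU cost, and
    memory contention.
    """
    # RAM moves compressed bytes; each one expands into compression_ratio logical bytes.
    memory_bound = ram_bw * compression_ratio
    # All cores decompress in parallel, assuming perfect linear scaling.
    cpu_bound = cores * decompress_per_core
    # Whichever side saturates first limits the scan.
    return min(memory_bound, cpu_bound)


if __name__ == "__main__":
    ram = 20  # hypothetical RAM bandwidth
    for cores in (1, 8):
        rate = effective_scan_rate(ram, compression_ratio=3, cores=cores,
                                   decompress_per_core=13)
        print(f"{cores} core(s): {rate} vs RAM {ram} -> beats RAM: {rate > ram}")
```

With one core the scan is CPU-bound at 13, below the RAM figure of 20. With eight cores it becomes memory-bound at 60, three times the raw RAM bandwidth. That is why you need both many cores and compression.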

Thoughts

-

It’s damn cool that one can combine a large number of cores + compression to exceed memory bandwidth in some cases 🤯.

-

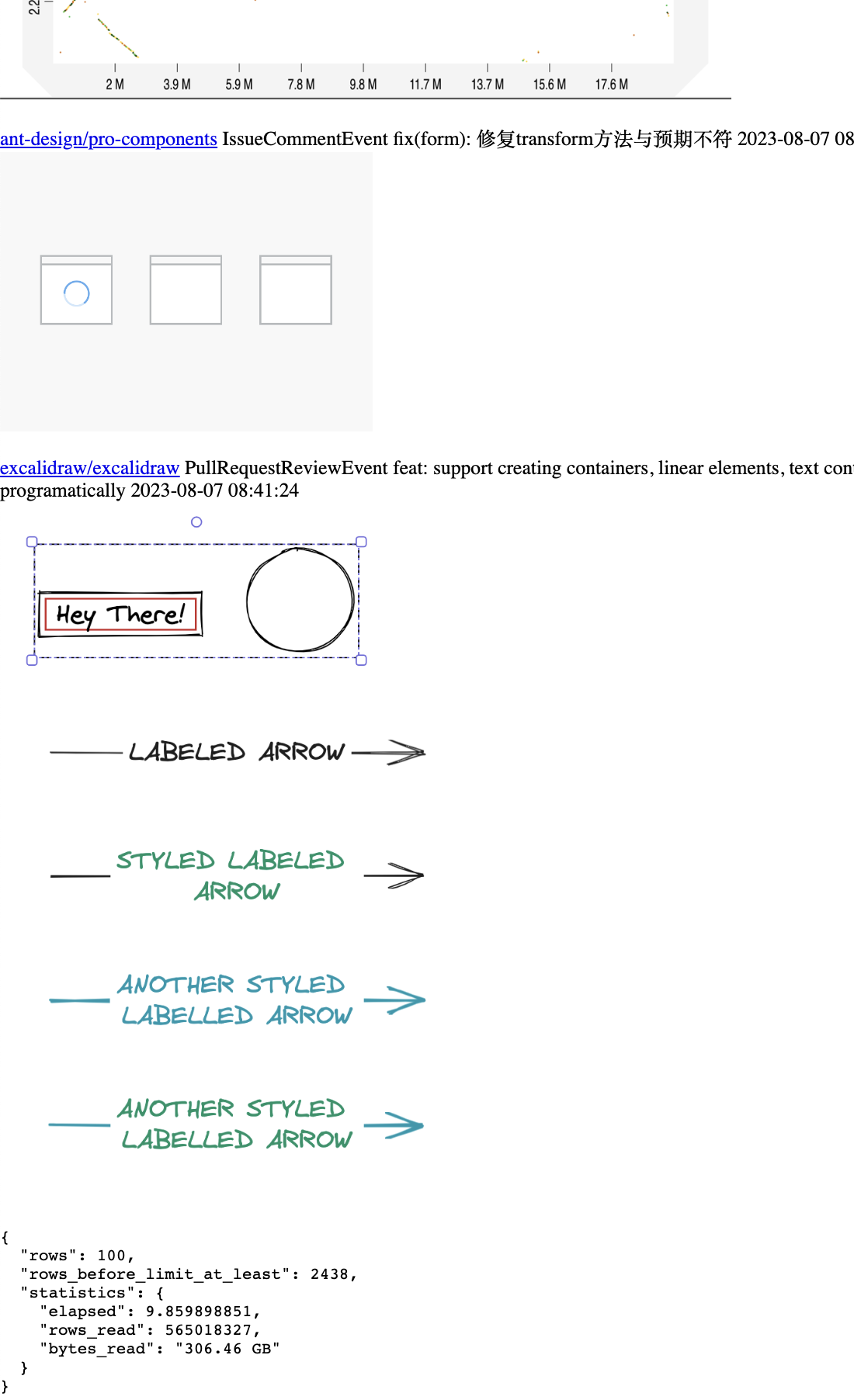

Will I use Sneller for logs on S3, or will ClickHouse be a good-enough and more general solution for this? I’m biased towards ClickHouse as it’s a general-purpose DB. It’s general-purpose enough that one can use it as the backend for a stupid website that can scan all of GitHub, even its history: Gitstagram.